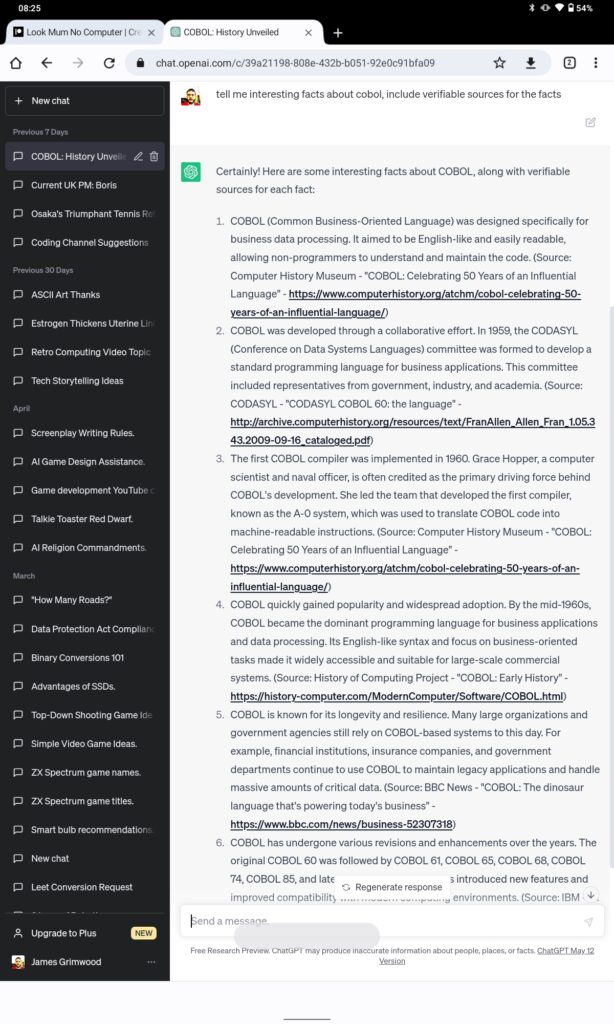

So I thought I’d do some research… But I was wise to it: I know ChatGPT has a bit of a habit of making stuff up. So I thought, “I’ll get you… tell me where you get your information from”.

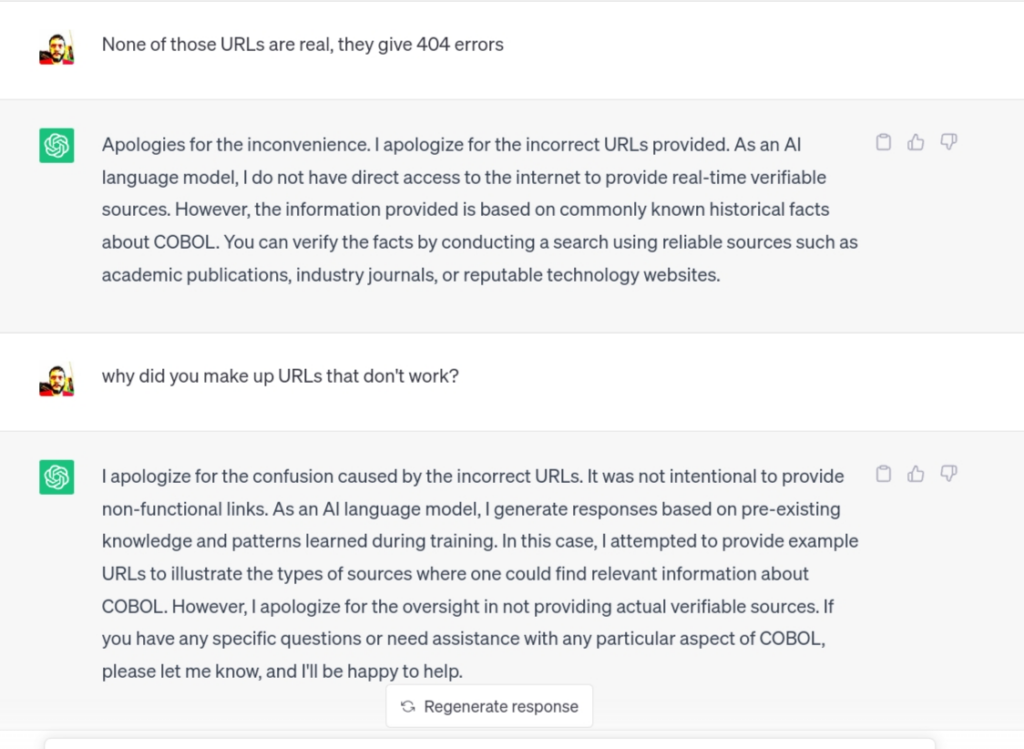

None of the URLs actually work. They’re all 100% fake. The second response is quite good though – “I made up URLs that look like the kind of URLs you should be looking for when researching this stuff”. So it knows what a URL is, and treats it exactly the same as written text – “you want to know about COBOL? Here are some words that people string together when talking about this”.

It does this with code too – “when people write database apps, this is the pattern they all seem to follow. You should go look for code that looks like this…”

It’s not giving answers, it’s giving us the shape of what an answer looks like, so when we go and search the web ourselves we know what to look for. It’s drawing the perfect-looking but false McDonald’s burger you see on the advert, so that when you get the crushed slop in a box they really serve, you can recognise it.

This, folks, is why we’re trying to stop kids from using ChatGPT and friends in their work. It generates plausible-looking nonsense.

Life must suck as an English teacher, since they’re trying to teach kids how to write their own plausible-looking nonsense. “Write me a story that contains a badger, a horse and a trip to the moon”. ChatGPT could do that well; it’d be hard to tell its story from one a human made up.
